Using AI systems like Claude and ChatGPT for code recovery

Recently, a growing number of articles and other materials have appeared on recovering code with artificial intelligence (AI) technologies. This has prompted many questions from users about how effective the method is and how well it applies to reverse engineering in general, or to recovering source code from compiled EXE files and from DLL and OCX libraries. In this article, I will try to give a comprehensive answer to these questions.

Where it all started

Some time ago, a post appeared on Reddit in which a community member described recovering a game developed 27 years ago. The game was written in Visual Basic 4.0, which is extremely outdated by today's standards. Even our own decompiler, VB Decompiler, which has been in development since 2005, does not support this version because it is so old. After reading the post, which you can easily find by searching for its title, "I Uploaded a 27-Year-Old EXE File to Claude 3.7 and What Happened Next Blew My Mind", it may seem that the future has already arrived: that source code can now be recovered from machine code simply by talking to a chatbot and nudging its answers in the right direction.

Realities of AI decompilation

Unfortunately, a closer look at the author's post reveals some important details: his EXE file contained help text that thoroughly described how the game worked. The program also contained plenty of other text, such as error messages and informational messages. As it turned out, Claude did not decompile the code. Instead, guided by the help text and the strings found in the program, it worked out how the program "ideally" should behave and wrote an equivalent in Python. In other words, the AI used the text strings embedded in the program as instructions for writing a similar program from scratch, without performing any decompilation.
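To make concrete what kind of material the model had to work with, here is a minimal Python sketch that pulls printable text out of raw bytes, similar in spirit to the Unix `strings` utility. The function name `extract_strings`, the four-character minimum, and the sample bytes are assumptions made up for illustration; none of them come from the Reddit post.

```python
import re

def extract_strings(data: bytes, min_len: int = 4) -> list[str]:
    """Return runs of printable ASCII and UTF-16LE text found in raw bytes."""
    found = []
    # ASCII runs: printable bytes 0x20..0x7E, at least min_len in a row.
    for m in re.finditer(rb"[\x20-\x7e]{%d,}" % min_len, data):
        found.append(m.group().decode("ascii"))
    # UTF-16LE runs: each printable byte is followed by a zero byte.
    for m in re.finditer(rb"(?:[\x20-\x7e]\x00){%d,}" % min_len, data):
        found.append(m.group().decode("utf-16-le"))
    return found

# Made-up sample: a few non-text bytes around two embedded messages.
sample = (
    b"\x8b\xec\x83\x00Press F1 for help\x00\x90\x90"
    + "Game over".encode("utf-16-le")
    + b"\x00\x00\xc3"
)
strings_found = extract_strings(sample)
print(strings_found)  # ['Press F1 for help', 'Game over']
```

The output contains the messages but nothing about the instructions between them, which is all a string-driven rewrite has to go on.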

Limitations of AI-based code analysis

The main drawback of this approach is that few programs, if any, ship with a built-in text description of how their code works. Even if your source contained numerous useful comments, none of them end up in the resulting EXE file, because the compiler strips them during compilation. Documentation makes it into the EXE file only if you deliberately place it on a form (dialog box) or print it to the console in a program that has no GUI. Among real-world files, these are rare exceptions.
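The loss of comments is easy to observe in any compiled language. As a rough analogy only (Python bytecode is not a Visual Basic executable), the sketch below compiles a snippet that contains a comment and a string literal, then checks which of the two survives in the serialized code object. The snippet and its marker text are invented for this demonstration.

```python
import marshal

# Made-up source: one comment and one string literal.
SOURCE = '''
# SECRET-ALGORITHM: rotate the key by 13 before hashing
message = "Press F1 for help"
'''

code_obj = compile(SOURCE, "<demo>", "exec")
blob = marshal.dumps(code_obj)  # serialized form of the compiled code object

comment_survives = b"SECRET-ALGORITHM" in blob
literal_survives = b"Press F1 for help" in blob
print(comment_survives, literal_survives)
```

The comment disappears while the string literal stays, which is why a model reading the compiled file sees user-facing messages but none of the developer's explanations.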

Another problem with this approach is that the actual code is not decompiled; new code is written based solely on the strings the AI found and its guesses about how the program might work. In other words, if your code contains any unique algorithms, they will not be recovered. Moreover, code generated this way will not match the original. It is completely new code that merely tries to solve a problem similar to yours, drawing only on the data the AI model was trained on.

Put simply, if you skip such "decompilation" attempts, write a text description of how your program should work, and ask ChatGPT or Claude to write something similar in the language of your choice, the result may be even better. In both cases you receive completely new code that does not resemble yours. It will not use the algorithms you devised or build on your know-how; it will do something similar to, but different from, what your program did. And this approach succeeds only if the AI model was trained on comparable algorithms. Unfortunately, that is exactly how it is. If your code contained proprietary algorithms that are trade secrets, the AI model will never recover them. It simply does not restore code in the usual sense of the word. Instead, it writes new code from scratch, solving your problem with its own experience rather than yours.

Features of decompiling Visual Basic 5.0 and 6.0 applications

What characterizes the earlier versions of Visual Basic for MS-DOS and early versions of Windows 95? They lack classes and function scope, and they do not support the tens of thousands of third-party ActiveX components used on forms (dialog windows). Most importantly, they do not make extensive use of the COM/OLE model for working with external libraries and components.

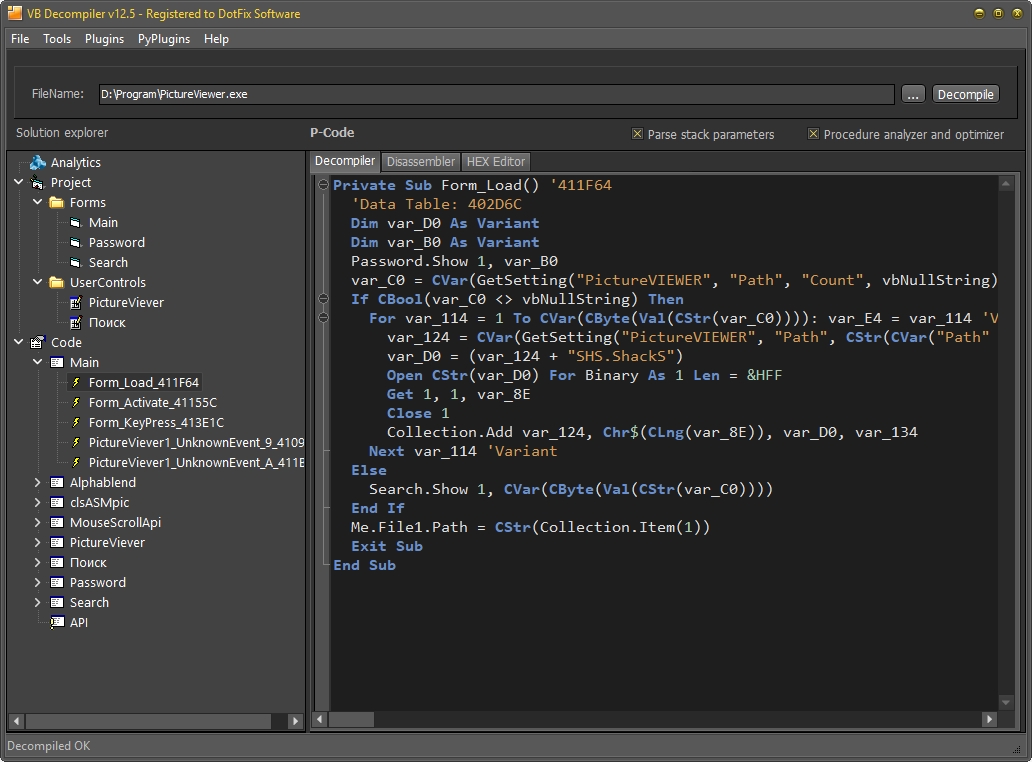

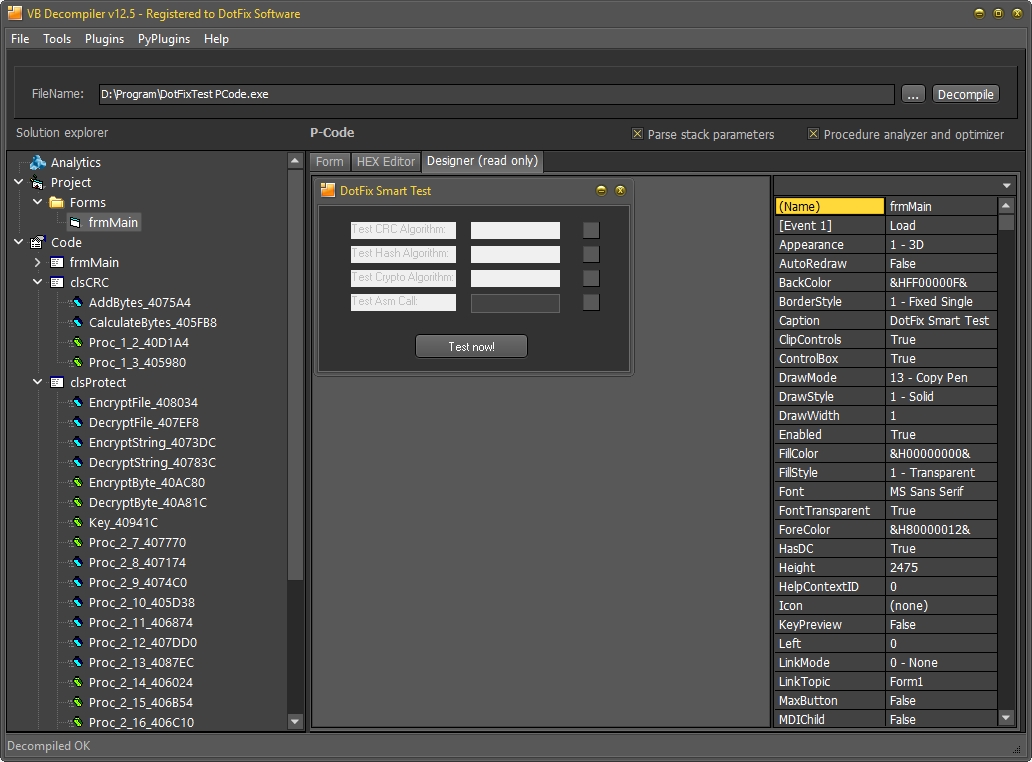

Visual Basic versions 5.0 and 6.0 (they are very similar, although they use different versions of the Framework library code, so from here on I will treat them as one language and compiler) support two types of compilation: interpreted pseudo-code (known as P-Code) and x86 machine code (known as Native Code). Both produce an EXE file. From the outside, you cannot tell P-Code from Native Code until you look inside the file or try to decompile it. VB Decompiler shows how the program was compiled as soon as it opens and analyzes the file, so you can also use it simply to determine the compilation type of your file.

The difference between the two compilation types is colossal. P-Code is an interpreted language whose virtual machine has up to 1400 opcodes. These are not just primitive arithmetic or logical operations that an AI model could potentially recover. Most of them deal with COM objects: early and late binding when creating objects, and accessing the fields and methods of previously created objects by name, by reference, by interface through the virtual table (VTable), and by DispatchID identifiers. Besides the P-Code itself, decompilation requires parsing a massive number of metadata tables. These tables are not documented anywhere; they are part of a closed format.

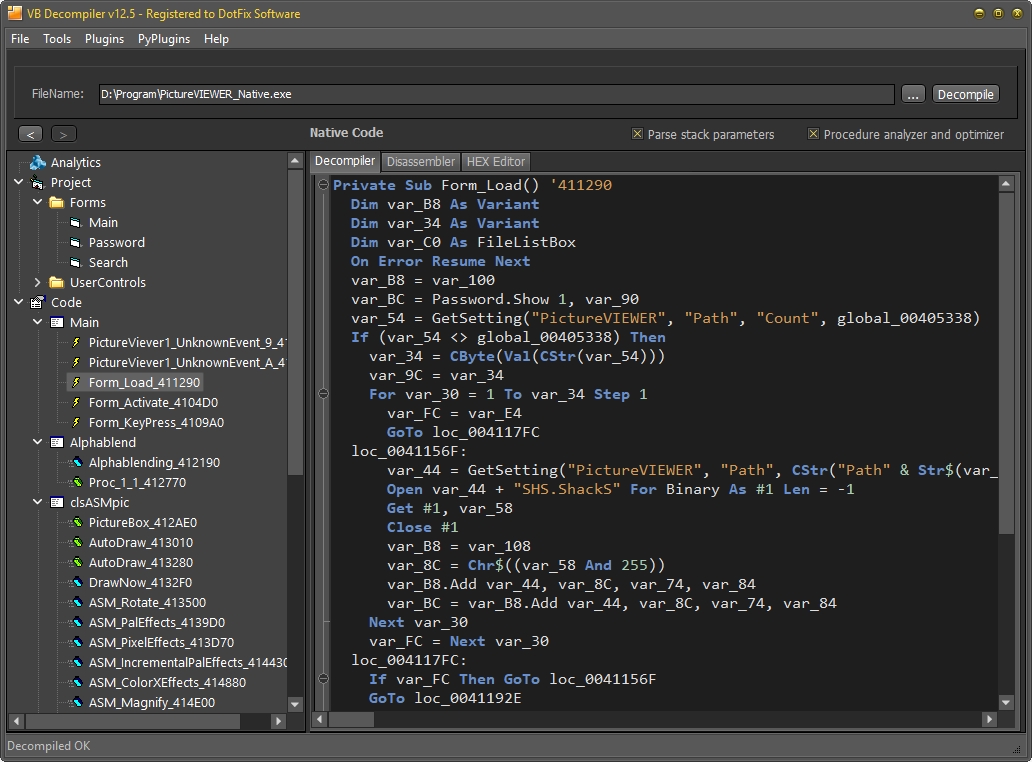
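To illustrate why opcodes alone are not enough, here is a toy sketch. The opcode numbers, mnemonics, and table layout are entirely hypothetical and do not reflect the real Visual Basic P-Code format. The only point is that an operand such as a table index stays meaningless until the matching metadata table has been parsed.

```python
from typing import Optional

# Hypothetical two-opcode virtual machine; NOT the real VB P-Code encoding.
# Maps opcode byte -> (mnemonic, number of operand bytes).
OPCODES = {0x10: ("PushLiteral", 1), 0x20: ("CallMember", 2)}

def disassemble(code: bytes, members: Optional[dict] = None) -> list[str]:
    """Decode the toy bytecode; resolve member indices only if a table is given."""
    out, pc = [], 0
    while pc < len(code):
        name, size = OPCODES.get(code[pc], ("Unknown", 0))
        operand = int.from_bytes(code[pc + 1 : pc + 1 + size], "little")
        if name == "CallMember" and members is not None:
            # With the (hypothetical) metadata table the index becomes a name.
            out.append(f"{name} {members.get(operand, '<unresolved>')}")
        elif size:
            out.append(f"{name} 0x{operand:X}")
        else:
            out.append(name)
        pc += 1 + size
    return out

code = bytes([0x10, 0x05, 0x20, 0x02, 0x00])
without_tables = disassemble(code)
with_tables = disassemble(code, members={2: "Form1.Caption"})
print(without_tables)  # ['PushLiteral 0x5', 'CallMember 0x2']
print(with_tables)     # ['PushLiteral 0x5', 'CallMember Form1.Caption']
```

Without the table, `CallMember 0x2` says nothing about which object or member is involved, and a model can only guess.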

What about Native Code? It is simpler and more complex at the same time. Simpler, because instead of up to 1400 virtual machine opcodes we have fewer than 700 API functions in MSVBVM60.DLL (or MSVBVM50.DLL for Visual Basic 5.0). More complex, because it is not a sequence of P-Code instructions that can be interpreted and combined step by step. It is optimized machine code, and different optimization options produce different code. Furthermore, unlike with P-Code, we never know in advance what memory address a simple addition, subtraction, or logical operation refers to. It could be anything from an offset into a one-dimensional array to an offset into a VTable (virtual method table). It could also be stack or data alignment. Anything is possible. To decompile Native Code in VB Decompiler, we had to develop a static machine code emulator that virtually executes a function's code step by step and at any given moment holds a snapshot of all registers, the CPU stack, the math coprocessor stack, program variables, and so on. Even this sometimes fails, especially when COM objects are created in one function and used in another, with everything resolved at run time through late binding. I have been working on the Native Code decompiler for 20 years, and it is still far from complete: VB Decompiler recovers around 75% of the code in Native Code programs.
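The idea of a static emulator can be sketched in a few lines. This is a deliberately tiny illustration over a made-up three-instruction subset with a tuple-based instruction format; it says nothing about how VB Decompiler's emulator is actually implemented. It tracks register and stack contents symbolically, so nothing is ever run on real hardware.

```python
def emulate(instructions):
    """Symbolically step through (mnemonic, operands...) tuples, recording state."""
    regs = {"eax": "eax0", "ecx": "ecx0"}  # symbolic values on function entry
    stack = []                             # symbolic stack, top at the end
    snapshots = []
    for ins in instructions:
        op = ins[0]
        if op == "mov":                    # mov dst, src
            _, dst, src = ins
            regs[dst] = regs.get(src, src)  # src is a register name or a literal
        elif op == "add":                  # add dst, src
            _, dst, src = ins
            regs[dst] = f"({regs[dst]} + {regs.get(src, src)})"
        elif op == "push":                 # push src
            stack.append(regs.get(ins[1], ins[1]))
        else:
            raise ValueError(f"unsupported instruction: {op}")
        # Keep a copy of the full state after every instruction.
        snapshots.append((dict(regs), list(stack)))
    return snapshots

trace = emulate([
    ("mov", "eax", "8"),
    ("add", "eax", "ecx"),
    ("push", "eax"),
])
final_regs, final_stack = trace[-1]
print(final_regs["eax"], final_stack)  # (8 + ecx0) ['(8 + ecx0)']
```

Whether `(8 + ecx0)` is an array offset, a VTable offset, or plain arithmetic cannot be decided from these three instructions alone. It depends on what `ecx` held on entry, which is exactly the ambiguity described above.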

Inability to use AI models to decompile Visual Basic 5.0 and 6.0 applications

In summary, AI models cannot effectively decompile Visual Basic 5.0 and 6.0 applications, because these applications rely on COM/OLE objects, external components, and memory addresses whose purpose is not known in advance. GPT models can help only with narrowly specialized algorithmic tasks; they are not suitable for decompiling object-oriented languages such as Visual Basic, which rely heavily on metadata tables.

Conclusions

When can an AI model be helpful? When a programming task needs to be solved and the way it is solved does not matter. However, as mentioned above, it is more efficient to write a technical brief for the AI model than to feed it compiled EXE files of old programs. The result is cleaner, more modern code. Put simply, artificial intelligence can already write decent code tailored to your tasks. Unfortunately, AI is currently unable to reconstruct source code from P-Code or machine code, because that requires parsing a huge amount of metadata whose format is closed and unknown to AI models.

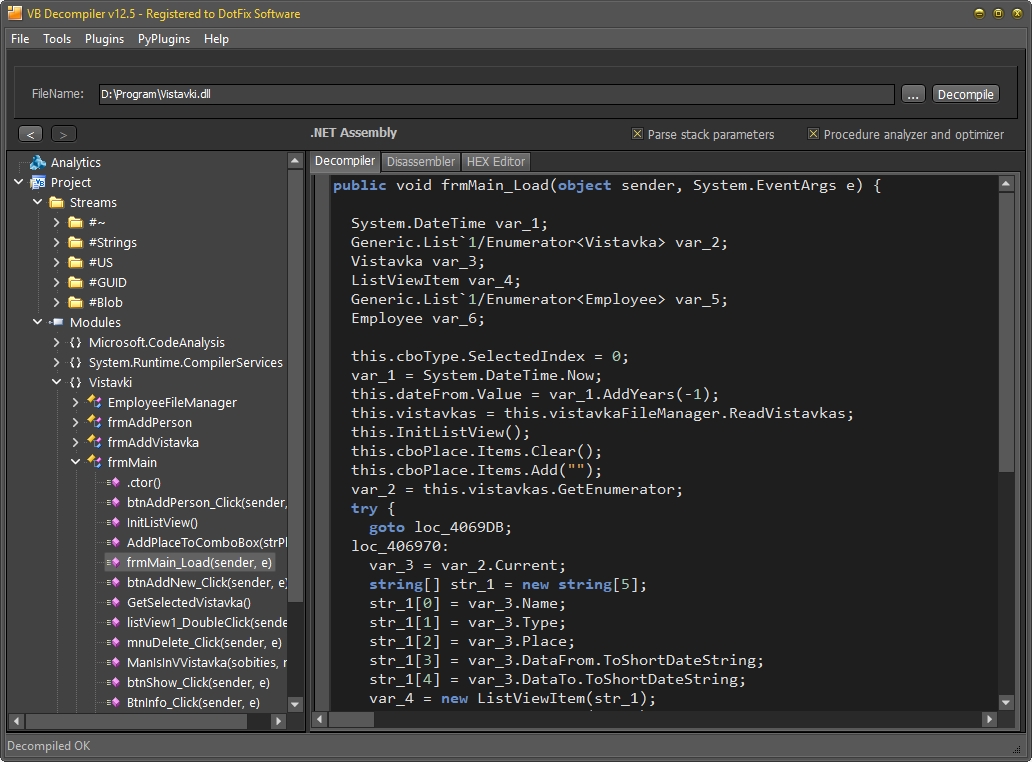

When is a decompiler like VB Decompiler needed? Whenever you need to analyze the exact code inside an EXE file rather than similar code generated by an AI model. This includes analyzing malicious files in antivirus laboratories to determine what they do, and forensic analysis of programs for traces, backdoors, and hidden functions. It also includes recovering closed algorithms that represent know-how, without using the internet or external big data servers. VB Decompiler meticulously examines all metadata tables embedded in the file and virtually emulates the code (without running it on real hardware) to reconstruct as much of the original code as possible. The recovered code may not be perfect, but it accurately reflects the original logic of the program as its author wrote it. It contains no code that an AI model invented because it lacked the data to form a complete picture, as often happens with AI assistants. The code is exactly what is present in the file. If a particular line cannot be decompiled correctly, you will see UnknownCall or something similar instead of an AI guess. From an algorithm research perspective, this is the most honest and correct approach.
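The "explicit placeholder instead of a guess" policy can be sketched as a simple lookup with a fallback. The mapping below is purely illustrative: the two runtime names serve only as examples, and the `UnknownCall(...)` output format and the `render_call` helper are assumptions, not VB Decompiler's actual output or internals.

```python
# Illustrative mapping only; a real decompiler's tables are far larger.
KNOWN_CALLS = {
    "rtcMsgBox": "MsgBox",
    "__vbaStrCat": "&",
}

def render_call(import_name: str) -> str:
    """Translate a recognized runtime call, or emit an explicit placeholder."""
    # Anything not in the table is reported as unknown rather than guessed.
    return KNOWN_CALLS.get(import_name, f"UnknownCall({import_name})")

print(render_call("rtcMsgBox"))     # MsgBox
print(render_call("sub_00401A30"))  # UnknownCall(sub_00401A30)
```

The reader of such output always knows which parts were recovered from the file and which parts were not recovered at all.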

In general, I recommend using all available methods and tools for any task at hand. It's crucial to understand each method's limitations and take them into account in your work. Good luck with your research!

March 20, 2025

(C) Sergey Chubchenko, VB Decompiler's main developer